Careening towards destruction

Watch this video and then read on….

Think about the calculations that need to be made to avoid the obstacles put before the robot, ensuring that it never trips, it never crashes, it never falls over. They are goals even humans cannot achieve. Now imagine a humanoid robot with the ability to jump, run and dodge faster than any human. Start by asking it to play chess, then put it on a sports field for entertainment. Shortly after that, arm it, and call it a protector or defender. Now see the technology hacked and stolen by a foreign enemy. Or add artificial intelligence that makes the soldier ‘autonomous’ and superior to the enemy’s.

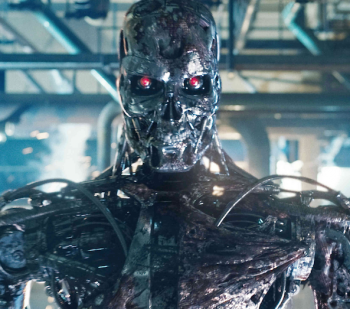

Is the technology in this robot ‘cheetah’ designed to help driverless cars avoid collisions or reduce the cost of transport by removing human drivers? Yes. But could it also be a step towards Terminator-like weaponry? Yes.

Ask a mountaineer why they crest the world’s tallest peak and they respond, ‘Because it’s there.’ Robotics and AI engineers have, perhaps blindly, adopted the same attitude: ‘We do it because it’s there.’ In another step down a presciently scripted road, one warned of by Bill Gates, Elon Musk and Stephen Hawking no less, tech whizzes are now advancing technology that is intended for the good of mankind but which may one day destroy it.

History is not devoid of examples of technological developments that were driven by good intentions and societal advancement, but were subsequently corrupted. Nuclear fission comes immediately to mind.

In January, Tesla and SpaceX founder, Elon Musk, donated $10 million to the Future of Life Institute (FLI), a group dedicated to the prevention of risks associated with the development of artificial intelligence.

But attempting to ensure that automated systems will continue to listen to and obey humans in the future seems naive.

Earlier in the year the FLI also released an open letter calling for “continuous control” over artificial intelligence, as the technology may one day grow beyond the control of the humans who developed it.

The letter was signed by industry experts such as Demis Hassabis, Shane Legg and Mustafa Suleyman, the co-founders of British AI company DeepMind, which was acquired by Google in 2014. It was also signed by Elon Musk and Stephen Hawking. A copy of the letter in PDF is available for download here.

But one cannot help but think of mountaineers ‘doing this for good’ when one reads in the letter: “Success in the quest for artificial intelligence has the potential to bring unprecedented benefits to humanity, and it is therefore worthwhile to research how to maximize these benefits while avoiding potential pitfalls.”

To discover just how determined vested interests are to climb this mountain one only needs to immediately ban all further robotic and AI development. The subsequent outcry would be heard in the furthest reaches of the ‘omniverse’, confirming that momentum is already too far advanced to be contained.

Roger Montgomery is the founder and Chief Investment Officer of Montgomery Investment Management.

garry howlett

:

A simple story from Professor Margaret Boden explains why it will be difficult for AI ever to attain a human level of intelligence.

The fisherman goes fishing and catches a beautiful fish, who as he is being dragged into the boat cries “I don’t want to die, throw me back into the water and I will grant you 3 wishes”.

The fisherman thinks and starts to make his wishes.

wish 1/ My son is at war and I haven’t seen him for a long time, can you please bring him home?

wish 2/ We have little money so can you give us $50k.

wish 3/ I am not sure about the 3rd wish, can I let you know tomorrow?

The fish agrees and he is thrown back into the water. The fisherman goes home and moments later there is a knock on the door, and they bring his son back into the house dead in a coffin. Ten minutes after this there is another knock on the door, and it’s the postman with a letter and a cheque inside for $50k from the life insurance company.

Substitute the fish with the AI machine and you get the point.

Oh and the 3rd wish granted was to please undo the first 2 wishes.

xiao fang xu

:

What are the probabilities/odds/chances:

of comets and asteroids hitting earth (size that wiped out dinosaurs)

of a Krakatoa-type volcano exploding

of WW3 ( USA vs Russia or China )

of a Black Death-type epidemic

of AI destruction of humans

in next 100/200/300..5000 years ???

colin jones

:

I cannot really see a problem. This is evolution. A technologically superior product is spawned by the human race and two possibilities emerge. Firstly, the race becomes extinct. The Neanderthals and Denisovans disappeared 40,000 years ago. The trilobites lasted hundreds of millions of years. Overall this is progress of a significant kind. Secondly, the human race coexists in a manner determined by the robots. Probably better than coexisting with some of the monsters humans have had (Hitler, Stalin, etc.), and the robots would probably be better managers than the current crop!

Roger Montgomery

:

oh boy!

Robert Summers

:

Roger,

Many highly touted ‘disruptive’ technologies have been subject to similar concerns. Consider the claims made surrounding the Human Genome Project by those most closely involved (‘the next step in human history’, ‘more significant than the invention of the wheel’, etc) and the subsequent public and political concerns about the impact of human cloning and genetic engineering technologies. Not sure about you, but I haven’t heard much concern about those issues in a long time. Nearly a decade and a half on, human medicine has not been able to escape from Eroom’s Law (the reverse of Moore’s law: doi:10.1038/nrd3681): productivity in developing new therapeutic treatments is declining (let alone delivering the step-change advance that was predicted). See the below NYTimes article for example:

http://www.nytimes.com/2014/11/16/magazine/why-are-there-so-few-new-drugs-invented-today.html

The much-heralded nanotech revolution provides a similar example. Reality, at its margins, has the bad habit of being more complicated than we first thought. In the meantime, regulations moved quickly in most countries to prohibit the more morally contentious research agendas once they entered public debate (much to the chagrin of some in the research community).

In my opinion artificial intelligence and the ‘big data revolution’ are also likely to be subject to the same over-confident predictions from insiders. Consider for example the failings of Google Flu Trends:

http://gking.harvard.edu/publications/parable-google-flu%C2%A0traps-big-data-analysis

I’m as big a supporter of using predictive models to inform human decisions as the next man, but one must recognise that even the best supercomputer-based algorithms are just that: predictive/associative models based on a) supplied computing power, b) supplied algorithms and c) supplied data (the quality of data cannot be over-emphasised).

By the same token, time and time again humans have demonstrated an inability to fully recognise the long-term consequences of our actions, particularly when it comes to technology and complex systems. Sure, in time the robots might get us, but only if energy, water and food insecurity, climate change, financial crisis, political instability and microbial drug resistance don’t get to us first.

Roger Montgomery

:

Thanks Robert. “time and time again humans have demonstrated an inability to fully recognise the long-term consequences of our actions”

Lucien Heffes

:

This is a link to an incredible robotic prosthesis to replace severed legs. I saw the TV documentary a while back. I seriously did not know that the subject was a double amputee until he told the interviewer. I can’t remember the name of the doco.

http://www.smithsonianmag.com/innovation/future-robotic-legs-180953040/?no-ist

Lucien Heffes

:

It’s a conundrum. AI and robotic technology could lead to the development of an effective exoskeleton for paralysed people. Amongst many other things, there has been incredible development in prostheses for severed limbs. On the other hand, Terminator or Asimov ‘I, Robot’ type machines may be less than a generation away. I guess we’ll deal with it at the time, as long as they don’t look like Arnie!